Lab Automated Build & Deploy: An Exercise in Pain.

Well, firstly, apologies to anyone who was hanging onto my every word waiting for this follow-up to my previous article.

I was so terribly busy at the office (with the project this exercise pertained to) that I never had time to finish the automated build and deploy I was working on in that last blog post, or to document it when I did. So here it is, and I apologise for the delay.

The original title of this post was "Lab Automated Build & Deploy: A Novice Attempt"; however, after finally crossing the t’s and dotting the i’s, I can assure you that the current title is quite apt.

Got there in the end, but for my first “rodeo” I sure picked a corker :)

1.0 The Environment

At Black Marble we're quite adept at using Microsoft Test Manager (MTM) Lab Management to procure VMs for our development and testing cycle, and I had a sterile four-box test rig sitting in the library which I could roll out as I pleased.

So, to ensure our deployment always ran against a sterile environment, I rolled out a new one. It consisted of a domain controller (DC), a SQL Server, a SharePoint 2013 server and a CRM server. I then applied any settings that I couldn't easily script through PowerShell and took a snapshot of the environment using MTM's Environment Viewer.

Gotcha: I later had to amend my snapshot to include the PowerShell setting Set-ExecutionPolicy Unrestricted, as most of our deployment scripts were PowerShell based. For some reason, setting it from within the deployment scripts themselves didn't take reliably.

**Gotcha: My first run of the process utterly rodded the environment because the snapshot was dodgy. IT had to go into SCVMM to manually restore the environment to an earlier snapshot, something Lab couldn’t do because it reckoned the entire snapshot tree was in an unusable state. This happened every time I tried rolling the environment back to its snapshot. Evidently, taking an environment snapshot is a delicate process at times: while a snapshot is being taken, don’t interrupt your Lab Manager Environment Viewer session. IT swear blind this can cause behind-the-scenes shenanigans. In the end we took a new snapshot.**

**Gotcha: The second run of the process with the new snapshot failed! In IT’s words, Lab Manager is a touch daft: it fires off all the snapshot reversion jobs to SCVMM at once (that’s 4 jobs), and if any one of them fails it counts the entire lot as a failure. In our case the cause was that we had too many virtual machines running on our tin, and the snapshot process was timing out because of low resources. We moved some stuff into the VM Library and turned off or paused everything else we could that wasn’t needed.**

For more on those last two gotchas, and the tale that followed from them, see my first post, "Lab & SCVMM – They go together like a horse and carriage". It's probably also worth noting that a patch for SCVMM released around that time dramatically improved its stability when performing large numbers of operations.

With the environment in a mostly ready state, I started working on my build. The default Lab Deploy build template found in VS2012 runs as a post-build action: you can either specify an existing, completed build, or queue a new one from another predefined build definition. I chose the latter because, ideally, I wanted the development teams and myself to have the most recent version of the product in test every morning.

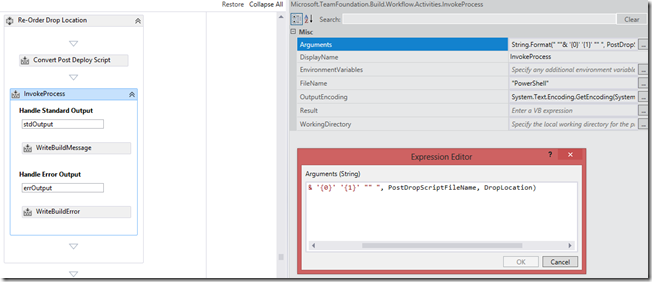

To start with, all I did was clone our CI build. I customized the build process template used for this build to accept a file path to a PowerShell script, which it would then execute after the drop. With some string manipulation of the DropLocation parameter in the build workflow, I was able to invoke that PowerShell script, which is included in the project in every build.

_My reorder-drop-location process: first I convert the workspace mapping of the master post-build script to a server mapping. I then use some string.Format shenanigans to construct the full file path and arguments for the master post-build script (taking the drop location the template has already created for me). I then pass these to an InvokeProcess workflow activity to fire up PowerShell on the build server/agent!_

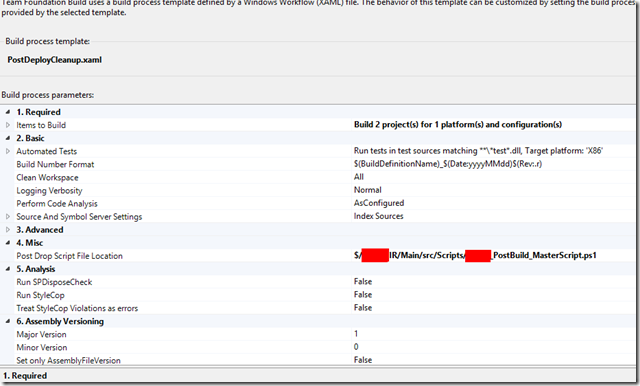

Passing the post-drop script file location as it exists in a workspace. I had to do this because the existing post-build script section has no macro for the drop location, which my scripts NEEDED.

I could probably have used the Post-Drop PowerShell Script File and Arguments section of the build process, were it not that at the time I had no way of getting hold of the drop location parameter (and as our drop folders are all version-stamped, they change from build to build). I probably could have done it with some MSBuild wizardry, but that's hindsight for you.
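For anyone wanting to replicate this, here's a rough Python sketch of what that string.Format step produces. In my build it was an expression in the workflow feeding an InvokeProcess activity, not Python, and the -DropLocation parameter name and the paths are hypothetical. Passing -ExecutionPolicy on the powershell.exe command line only affects that one process, which is one alternative to baking Set-ExecutionPolicy into the snapshot.

```python
from pathlib import PureWindowsPath


def build_post_drop_command(script_path: str, drop_location: str) -> list[str]:
    """powershell.exe argv for the master post-drop script (list form)."""
    return [
        "powershell.exe",
        "-NoProfile",
        # Applies to this powershell.exe process only.
        "-ExecutionPolicy", "Unrestricted",
        # -File must be the last powershell.exe option; anything after the
        # script path is passed to the script itself.
        "-File", str(PureWindowsPath(script_path)),
        # Hypothetical script parameter. The drop folder is version-stamped,
        # so it has to be supplied per build rather than hard-coded.
        "-DropLocation", drop_location,
    ]


def format_arguments(script_path: str, drop_location: str) -> str:
    """The same thing as a single Arguments string, string.Format style."""
    # Both paths are quoted in case they contain spaces.
    return ('-NoProfile -ExecutionPolicy Unrestricted -File "{0}" '
            '-DropLocation "{1}"').format(script_path, drop_location)
```

The string form maps onto InvokeProcess's FileName (powershell.exe) plus Arguments; the list form is what you'd hand to subprocess.run on a Windows box.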

The PowerShell file was really just a collection of Robocopy commands that shuffled the drops folder around into something resembling where my deployment scripts would want to find things. This made my life easier when I wanted to copy the deployable files from the drops location to each machine in the environment. You could just as easily use another command-line copy tool (or basic copy commands).
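Here's the idea of that reshuffle script as a Python sketch that just builds the Robocopy command lines. The folder names in LAYOUT are made up, as the real layout was specific to our solution; /E copies subfolders (including empty ones) and /COPY:D copies file data only.

```python
from pathlib import PureWindowsPath

# Hypothetical mapping: folder in the drop -> layout the deployment
# scripts expect. The real folder names were specific to our solution.
LAYOUT = {
    "CRM": r"Deploy\CRM",
    "Database": r"Deploy\SQL",
    "SharePoint": r"Deploy\SP",
}


def robocopy_commands(drop_location: str,
                      layout: dict[str, str] = LAYOUT) -> list[list[str]]:
    """One robocopy command per folder to be reshuffled within the drop."""
    drop = PureWindowsPath(drop_location)
    commands = []
    for source, target in layout.items():
        commands.append([
            "robocopy",
            str(drop / source),
            str(drop / target),
            "/E",       # include subdirectories, even empty ones
            "/COPY:D",  # data only; see the /COPYALL gotcha further down
        ])
    return commands
```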

This was for the most part a walk in the park.

What came next was a royal headache.

-------------------------------------------------------------------------------------------------------------------------------------------------------------------------

2.0 The Lab Build

This part of the problem would have been a lot easier had I understood how Lab Management and TFS Build fit together when deploying to a Lab environment.

As I now understand it, here is the sequence of events:

Your base build starts. It is kicked off by the build controller and delegated to the relevant build agent. The base build's post-build scripts are run by the TFSBuild service account on the build agent itself.

Your lab build then starts. This runs on the build agent as normal, but your deployment scripts are run on the machines in the environment that you designate in the process wizard.

I initially thought they were run by the Visual Studio Test Agent on that machine, which typically runs under an account with admin rights (oh, how wrong I was). Your scripts can get there from the build location via some back-door voodoo magic between TFS and Lab Management that ignores petty things like cross-domain access and authentication! Not that I used this; I did it the hard way.
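If it helps to keep "what does what, and as whom" straight, here's that sequence written down as data in Python. The stage labels are mine rather than TFS terminology, and the deployment-script row reflects what I eventually found out in section 3.0 (it's the Lab Agent service account). The rows saying the base build and the lab build workflow run as the TFSBuild service account are an assumption on my part, read from "as normal".

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    runs_on: str
    runs_as: str


# The sequence above as data. Labels are illustrative; the two build rows'
# accounts are assumed from "runs on the build agent as normal".
PIPELINE = [
    Stage("Base build", "build agent", "TFSBuild service account"),
    Stage("Base build post-build scripts", "build agent", "TFSBuild service account"),
    Stage("Lab build", "build agent", "TFSBuild service account"),
    Stage("Lab deployment scripts", "designated environment machines",
          "Lab Agent service account"),
]


def who_runs(stage_name: str) -> tuple[str, str]:
    """Return (where, as whom) for a named stage."""
    for stage in PIPELINE:
        if stage.name == stage_name:
            return stage.runs_on, stage.runs_as
    raise KeyError(stage_name)
```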

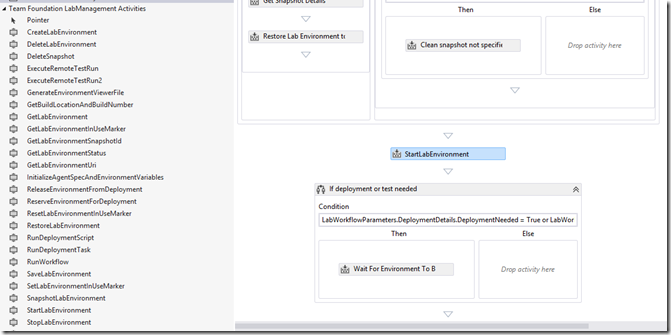

**Gotcha! What I found was that I was reverting to a snapshot in which the environment was turned off. Our IT have a thing about stateful snapshots and memory usage, so naturally all my snapshots were stateless (i.e. the environment was turned off!). The logical repercussion was that everything after the base build failed spectacularly. This was a relatively easy fix: I modified the Lab Deploy build process template to include an additional step that turns the environment back on. It’s kind of nice in that it waits for the lab isolation to finish configuring before it attempts to run any scripts.**

**For the record, this is not a custom workflow activity; it’s in there already.**
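The fix boils down to an ordering rule: restore the stateless snapshot, start the environment, wait for isolation, and only then run scripts. Here's a toy Python model of that; LabEnvironment is a hypothetical stand-in, not a real Lab Management API, and the wait for isolation is modelled as completing instantly.

```python
from enum import Enum


class State(Enum):
    STOPPED = "stopped"
    RUNNING = "running"


class LabEnvironment:
    """Toy stand-in for a lab environment; not a real Lab Management API."""

    def __init__(self) -> None:
        self.state = State.RUNNING
        self.isolation_ready = True

    def restore_stateless_snapshot(self) -> None:
        # A stateless snapshot is taken with the machines off, so restoring
        # it leaves the whole environment stopped.
        self.state = State.STOPPED
        self.isolation_ready = False

    def start(self) -> None:
        self.state = State.RUNNING
        # The real start step waits for lab isolation to finish configuring;
        # here that is modelled as finishing immediately.
        self.isolation_ready = True

    def run_script(self, name: str) -> str:
        if self.state is not State.RUNNING or not self.isolation_ready:
            raise RuntimeError(f"cannot run {name}: environment not ready")
        return f"ran {name}"


def lab_deploy(env: LabEnvironment, scripts: list[str]) -> list[str]:
    env.restore_stateless_snapshot()
    env.start()  # the extra step added to the Lab Deploy template
    return [env.run_script(script) for script in scripts]
```

Drop the env.start() call and every run_script raises, which is roughly the "everything after the base build failed spectacularly" experience.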

So I now had a set of scripts, written by development, to set up the solution. I wrapped these in another set of scripts, specific to each environment, that would "pull" the deployables from the drops location down to each machine (to where the developer scripts expected them). Oh, but here was a really big problem: these scripts were running as the domain admin of the test environment, which was on an entirely different domain from our drops location!

There was no way I was going to be allowed (or able) to turn this environment into a trusted domain, and I couldn't seem to change the identity of the test agents either: no matter what I did in the UI, they seemed intent on running as NT Authority\System.

At this point there were two avenues available to me. I could execute all the Robocopy PowerShell scripts from the build process template, taking advantage of the previously mentioned voodoo magic. However, this still didn’t get me around the deployment problem, given that the scripts (as judicious use of whoami revealed) weren't running as the accounts they needed to.

My second option was to use net use to map the drop location as a drive, since that let me provide credentials in the script.
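The net use approach, sketched in Python as command lines for clarity (the drive letter, share and account in the example are hypothetical). Worth knowing: a password passed on the command line is visible to anything that can read process command lines, which is another reason to be wary of hard-coded credentials.

```python
def net_use_map(drive: str, share: str, user: str, password: str) -> list[str]:
    """'net use' command mapping a share as a drive with explicit credentials."""
    # The password follows the share; /user: takes DOMAIN\account, so the
    # script authenticates as that account rather than as whoever runs it.
    # /persistent:no stops Windows restoring the mapping at the next logon.
    return ["net", "use", drive, share, password, f"/user:{user}", "/persistent:no"]


def net_use_unmap(drive: str) -> list[str]:
    """Matching command to remove the mapping afterwards."""
    return ["net", "use", drive, "/delete"]
```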

I opted for the latter, which in hindsight was the more difficult option. My decision not to use the first option was helped by the fact that the process editor's interface for entering the scripts is an abomination in the eyes of good UI design.

I noticed almost immediately that the copy scripts were working in some cases and not in others when trying to pull my files down. Some detective work on what was different between the files that were being copied and the files that weren’t yielded the Gotcha! below.

Gotcha!: Robocopy was not my friend here! I had been using the /COPYALL switch on my Robocopy commands, which is equivalent to /COPY:DATSOU (data, attributes, timestamps, security, owner and auditing information). Copying the auditing information requires higher security privileges than were available to the account executing the copy script (which at this point was TFSLab, the account whose credentials we supplied to net use when mapping the network share). Omitting /COPYALL and using just /COPY:D solved the problem. Additionally, Robocopy won't necessarily replace files that already exist at the destination (by default it skips files whose size and timestamp match), so either use a blank snapshot (where the files have never been copied down before) or make sure your scripts can delete the files in place if you need to. I used Robocopy's /PURGE switch to mirror a blank folder, created via MKDIR, over the target folder, which recursively deletes its contents.
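Here are the two Robocopy invocations from that gotcha as Python command builders, plus an exit-code check. Two caveats: I've written the clear-down with /MIR, which is shorthand for /E plus /PURGE, rather than the bare /PURGE I used; and Robocopy's exit codes are bit flags where anything below 8 means success, so a wrapper that treats any non-zero code as failure will report successful copies as failed. Paths are up to you.

```python
def pull_command(source: str, dest: str) -> list[str]:
    """Copy a folder tree, file data only."""
    # /COPY:D copies data only. /COPYALL (= /COPY:DATSOU) also copies auditing
    # info (U), which needs the "Manage auditing and security log" user right.
    return ["robocopy", source, dest, "/E", "/COPY:D"]


def clear_command(empty_dir: str, dest: str) -> list[str]:
    """Empty dest by mirroring a freshly created, empty folder over it."""
    # /MIR is /E plus /PURGE: anything in dest that isn't in the (empty)
    # source is deleted, recursively.
    return ["robocopy", empty_dir, dest, "/MIR"]


def robocopy_succeeded(exit_code: int) -> bool:
    """Robocopy exit codes are bit flags; 8 or above means something failed."""
    # 0 = nothing to copy, 1 = files copied, 2 = extra files, 4 = mismatches.
    return exit_code < 8
```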

So now we had all the deployables pulled down to their correct machines, and it should have been as simple as executing the child scripts.

Nobody except the CRM box was playing ball…grrr.

--------------------------------------------------------------------------------------------------------------------------------------------------------------------

3.0 SharePoint & SQL deployment

The problem here was that operations on these two machines had to be performed by specific users with specific access rights. I needed to execute my scripts as the SharePoint admin on the SharePoint server and as the domain admin on the SQL Server.

Not for love nor money could I get the test agents to execute the scripts as anything other than NT System. I tried all sorts of dastardly things, from switching the PowerShell execution context using Enter-PSSession (which I don’t recommend doing for long, as TFS Build loses all ability to log the deployment once you switch sessions) to adding NT System to some security groups it really shouldn’t be in.

All in all I didn’t like either of these solutions (though had I pursued them fully I’m sure they would have worked).

They were horrible. The first meant I could not easily debug the process, as I had no built-in logging, and it also meant I had hard-coded security credentials in my deployment scripts.

The second option is such bad practice that if my IT department saw what I did with the NT System account they’d shoot me on sight, because it really is **very, very bad practice**.

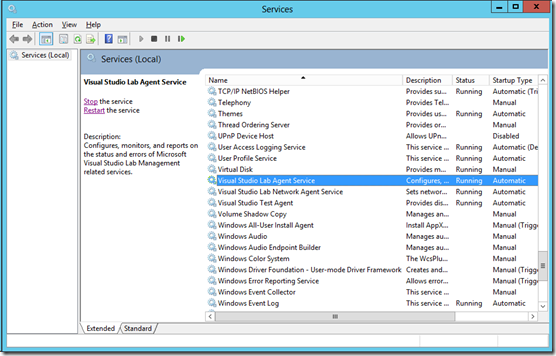

I was mulling it over until one day one of my more learned colleagues (the mighty “Boris”) clued me in that the Test Agent UI would execute the scripts as the account I configured if, and only if, I was running it as an interactive process rather than as a service. What I actually needed to change was the LAB AGENT service account (an important distinction here). The Lab Agent is separate from the Test Agent and, unlike the Test Agent, has no configuration tool.

_Note the little blighter in the image. It’s this service you have to change to provide a different execution account for your scripts, and it’s easy enough to do (right-click it, choose Properties > Log On and provide the account)._

Comprehension dawned. (FYI I used the latter)
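If you'd rather script the Lab Agent logon change than click through Services, sc.exe can do it. Below is a Python sketch that builds the commands; the service name is a placeholder (check the real one in the service's Properties dialog first), and the service needs a restart before the new account takes effect.

```python
import subprocess


def set_service_logon(service: str, account: str, password: str) -> list[str]:
    """sc.exe command that changes the account a service logs on as."""
    # sc.exe wants "obj=" and "password=" followed by a space, so each value
    # is its own argument rather than being glued onto the option.
    return ["sc.exe", "config", service, "obj=", account, "password=", password]


def restart_service(service: str) -> list[list[str]]:
    """The new logon account only applies once the service restarts."""
    # sc.exe stop returns before the service has fully stopped, so a real
    # script should poll "sc.exe query" until STOPPED before starting it.
    return [["sc.exe", "stop", service], ["sc.exe", "start", service]]


if __name__ == "__main__":
    # Placeholder service name and account; substitute your own.
    cmd = set_service_logon("LabAgentServiceName", r"TESTDOMAIN\SPAdmin", "secret")
    print(subprocess.list2cmdline(cmd))
```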

-------------------------------------------------------------------------------------------------------------------------------------------------------------------------

4.0 Result!

And suddenly everything started coming together. I had a couple of niggles here and there, such as scripts with the odd wrong parameter.

But overall the process now worked.

So, end to end, my first Lab Deploy build goes a little like this:

LabBaseBuild runs. This is a customized workflow that, as a post-build action, takes the drop location parameter generated during our normal CI build and passes it to a PowerShell script that wraps three child scripts. These child scripts move the CRM, SQL and SharePoint deployables into a mirror of the folder structures the developer scripts expect.

The Lab Deploy build then kicks off. This is a customized workflow that includes an environment start step after applying a stateless snapshot to the environment. The build then runs three scripts:

LabAgentDeployScript-CRM

LabAgentDeployScript-SQL

LabAgentDeployScript-SP

Each of these scripts is passed the build drop location as a parameter and maps this location using net use, with the lab service account’s credentials. That account is quite light on security permissions, and using it allows us to jump across from the test domain to our Black Marble domain.

The scripts first pull down the folders they need from the drops location using good old Robocopy.

Each script then calls the deployment script for each feature.
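Putting those steps together, here's roughly what one of the LabAgentDeployScript-* scripts does, as a Python sketch that returns the ordered command lines. The Z: drive, the C:\Deploy target, the per-role folder at the root of the drop, the child script name and the final unmapping step are my assumptions for illustration rather than details of our scripts.

```python
from pathlib import PureWindowsPath

# Hypothetical choices: the post doesn't give the drive letter or target folder.
DRIVE = "Z:"
LOCAL_ROOT = PureWindowsPath(r"C:\Deploy")


def deploy_plan(role: str, drop_location: str, user: str, password: str,
                child_script: str) -> list[list[str]]:
    """Ordered commands for one LabAgentDeployScript-<role> run."""
    local = LOCAL_ROOT / role
    return [
        # Map the drop share across domains with explicit credentials.
        ["net", "use", DRIVE, drop_location, password, f"/user:{user}", "/persistent:no"],
        # Pull this role's deployables down with good old Robocopy (data only).
        ["robocopy", f"{DRIVE}\\{role}", str(local), "/E", "/COPY:D"],
        # Hand over to the developer-written deployment script.
        ["powershell.exe", "-NoProfile", "-File", str(local / child_script)],
        # Assumed tidy-up step: remove the mapping again.
        ["net", "use", DRIVE, "/delete"],
    ]
```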

The CRM script uses a custom .exe that calls into the CRM SDK to import and publish a CRM solution, upload Reporting Services reports and import/assign user security roles.

The SQL script applies a DACPAC to a specific database, imports a new set of SSIS packages (.ispac files), and calls an external data source to update a database's contents with the client's application-specific data (which updates daily).

It then truncates a load of transaction logs, performs a database shrink, and executes our SSIS packages, importing 92 million+ rows of data.
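The post doesn't say which tools the SQL script drives, so treat this Python sketch as an assumption: SqlPackage.exe's Publish action is a common way to apply a DACPAC, ISDeploymentWizard.exe can deploy .ispac files to the SSIS catalog without its UI, and DBCC SHRINKDATABASE performs the shrink. Server, database, folder and project names are hypothetical; check the switches against your SQL Server version's documentation.

```python
def publish_dacpac(dacpac: str, server: str, database: str) -> list[str]:
    """SqlPackage.exe command that publishes a .dacpac to a database."""
    return [
        "SqlPackage.exe",
        "/Action:Publish",
        f"/SourceFile:{dacpac}",
        f"/TargetServerName:{server}",
        f"/TargetDatabaseName:{database}",
    ]


def deploy_ispac(ispac: str, server: str, folder: str, project: str) -> list[str]:
    """ISDeploymentWizard.exe command that deploys an .ispac without UI."""
    return [
        "ISDeploymentWizard.exe",
        "/Silent",
        f"/SourcePath:{ispac}",
        f"/DestinationServer:{server}",
        # Catalog path: /SSISDB/<folder>/<project>
        f"/DestinationPath:/SSISDB/{folder}/{project}",
    ]


def shrink_statement(database: str) -> str:
    """T-SQL to shrink a database; single quotes in the name are doubled."""
    escaped = database.replace("'", "''")
    return f"DBCC SHRINKDATABASE (N'{escaped}');"
```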

The SharePoint script upgrades a set of WSPs, activates the new feature for a site collection, configures a Business Data Connectivity application for SharePoint search, configures a SharePoint Search service application and kicks off indexing of the application database on the SQL Server.

It can also optionally roll out a fully scripted deployment of a site collection and a test site with test user accounts already set up.
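Similarly, here's a hedged Python sketch that generates the WSP upgrade and feature activation part of the SharePoint script, assuming the standard Update-SPSolution and Enable-SPFeature cmdlets; the solution, feature and URL names are hypothetical. Update-SPSolution hands the actual work to a timer job, so a real script should wait for that to finish before activating features; this sketch doesn't.

```python
def ps_quote(value: str) -> str:
    """Quote a value as a PowerShell single-quoted string literal."""
    # Inside single quotes PowerShell escapes ' by doubling it.
    return "'" + value.replace("'", "''") + "'"


def sharepoint_upgrade_script(wsps: dict[str, str], feature: str,
                              site_url: str) -> str:
    """Generate PowerShell that upgrades WSPs, then activates a feature."""
    lines = ["Add-PSSnapin Microsoft.SharePoint.PowerShell"]
    for solution_name, wsp_path in wsps.items():
        lines.append(
            f"Update-SPSolution -Identity {ps_quote(solution_name)} "
            f"-LiteralPath {ps_quote(wsp_path)} -GACDeployment"
        )
    # NOTE: no wait for the upgrade timer job here; a real script needs one.
    lines.append(
        f"Enable-SPFeature -Identity {ps_quote(feature)} -Url {ps_quote(site_url)}"
    )
    return "\n".join(lines)
```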

The build then ends.

-------------------------------------------------------------------------------------------------------------------------------------------------------------------------

5.0 In Conclusion

To say I learnt a lot doing this would be an understatement, but I could have picked a far easier project for my first build and deploy.

I think that for any first-timer using this technology, correctly understanding the way the various components of the build process talk to one another is key!

Basically, to do it right first time you need to know what does what, and who it's doing it as :)

Thanks for reading.