Our TFS Lab Management Infrastructure

After my session at Techorama last week I have been asked some questions about how we built our TFS Lab Management infrastructure. Here is a bit more detail, with thanks to Rik for helping correct what I had misremembered and for providing much of the detail.

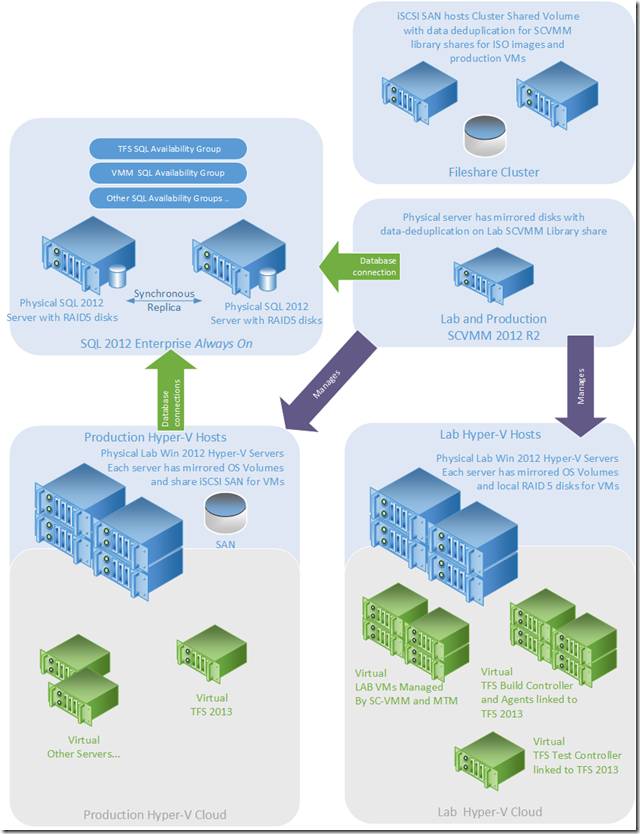

For SQL we have two physical servers with Intel processors. Each has a pair of mirrored disks for the OS and a RAID5 group of disks for data. We use SQL 2012 Enterprise Always On for replication to keep the DBs in sync. The servers are part of a Windows cluster (needed for Always On) and we use a VM, hosted on our production Hyper-V cloud, as a third server in the witness role. We have a number of availability groups on this platform, basically one per service we run. This allows us to split the read/write load between the two servers (unless they have failed over to a single box). If we had only one availability group for all the DBs, one node would be doing all the read/write and the other would be read only, so not that balanced.
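To make the balancing argument concrete, here is a minimal Python sketch. The node names (`SQL1`, `SQL2`) and the service names are made up for illustration and are not our real ones. It only counts which node holds the primary (read/write) replica of each availability group, and checks the simple majority rule that a two-node cluster plus a witness relies on.

```python
from collections import Counter


def primary_load(placement):
    """Count how many availability groups each node hosts as primary (read/write)."""
    return Counter(placement.values())


def has_quorum(votes_online, total_votes=3):
    """Majority rule: two SQL nodes plus one witness gives three votes in total."""
    return votes_online > total_votes // 2


# Hypothetical placements: group name -> node currently holding the primary replica.
single_group = {"AllDatabases": "SQL1"}
per_service = {"ServiceA": "SQL1", "ServiceB": "SQL2", "ServiceC": "SQL1", "ServiceD": "SQL2"}

print(primary_load(single_group))  # every read/write lands on one node
print(primary_load(per_service))   # read/write work is shared between both nodes
print(has_quorum(2), has_quorum(1))
```

With one group per service, the primaries can be spread so that each box does some of the read/write work. Losing any single vote (one SQL node or the witness) still leaves a majority of two out of three.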

SCVMM runs on a physical server with a pair of hardware-mirrored 2TB disks, giving 2TB of storage. That’s split into two partitions, as you can’t use data de-duplication on the OS volume of Windows. With de-duplication on the data partition, we have something like 5TB of Lab VM images stored on the SCVMM library share that’s hosted on the SCVMM server. This share is for lab management use only.

We also have two physical servers that make up a Windows Cluster with a Cluster Shared Volume on an iSCSI SAN. This hosts a number of SCVMM libraries for ISO images, production VM images and test stuff. Data de-duplication again is giving us an 80% space saving on the SAN (ISO images of OSes and VHDs of installed OSes dedupe _really_ well).
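As a rough sanity check on those numbers, the arithmetic can be sketched in a few lines of Python. The function names are just illustrative. The 80% figure is the one we see on the SAN, and applying it to the lab library share is an assumption rather than a measurement.

```python
def physical_size_tb(logical_tb, saving):
    """Disk space actually used after de-duplication, given a fractional saving."""
    return logical_tb * (1.0 - saving)


def saving_needed(logical_tb, physical_tb):
    """Minimum fractional saving for logical_tb of data to fit in physical_tb."""
    return 1.0 - physical_tb / logical_tb


# About 5TB of lab images on a data partition that must be smaller than 2TB.
print(round(saving_needed(5, 2), 2))        # lower bound on the saving required
# Assumption: the lab share dedupes about as well as the SAN (80%).
print(round(physical_size_tb(5, 0.80), 2))  # approximate space used, in TB
```

So anything above roughly a 60% saving is enough to fit 5TB of images on the 2TB mirror, and at an 80% saving the same images would need only about 1TB.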

Our Lab cloud currently has three AMD-based servers. They use the same disk setup as the SQL boxes, with a mirrored pair for OS and RAID5 for VM storage.

Our production Hyper-V cloud also has three servers, but this time in a Windows Cluster using a Cluster Shared Volume on our other iSCSI SAN for VM storage, so it can do automated failover of VMs.

Each of the SQL servers, SCVMM servers and Lab Hyper-V servers uses Windows Server 2012 R2 NIC teaming to combine 2 x 1Gbit NICs, which gives us better throughput and failover. The lab servers have one team for VM traffic and one team for the Hyper-V management traffic that is used when deploying VMs. That means we can push VMs around pretty much as fast as the disks will push data in either direction, without needing expensive 10Gbit Ethernet.
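For a feel of what a 2 x 1Gbit team means when deploying a VM, here is a back-of-the-envelope Python sketch. The 40GB VHD size is a made-up example, and the result is a theoretical upper bound that ignores protocol overhead and assumes the traffic really does spread across both NICs.

```python
def team_throughput_mb_s(nic_count, gbit_per_nic):
    """Theoretical aggregate throughput of a NIC team in MB/s (decimal units)."""
    return nic_count * gbit_per_nic * 1000 / 8


def copy_time_s(size_gb, throughput_mb_s):
    """Seconds needed to move size_gb of data at the given throughput."""
    return size_gb * 1000 / throughput_mb_s


team = team_throughput_mb_s(2, 1)   # two 1Gbit NICs in one team
single = team_throughput_mb_s(1, 1)  # a single 1Gbit NIC for comparison

# Hypothetical 40GB VHD, chosen only to illustrate the difference.
print(team, copy_time_s(40, team))
print(single, copy_time_s(40, single))
```

At these rates the network is in the same ballpark as what a RAID5 group of spinning disks can sustain, which is why the disks rather than the NICs tend to be the limit.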

So I hope that answers any questions.