The Issue

In Azure DevOps Pipelines there is a feature to dynamically configure the build number. For example, add the following at the top of a YAML build to get a version in the form 1.2.25123.1:

`name: $(major).$(minor).$(year:YY)$(dayofyear).$(rev:r)`

- Major & Minor are user created and managed variables
- Year, Dayofyear …
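As a rough sketch of the build number format described above, the top of a YAML pipeline might look like the following. The `variables` block and the values `1` and `2` are assumptions for illustration, chosen to match the 1.2.25123.1 example; the `name` line is copied as given.

```yaml
# Sketch only: major and minor are user-managed variables (values assumed here)
variables:
  major: 1
  minor: 2

# Build number format as quoted above; the year, day-of-year and revision
# tokens are filled in by Azure DevOps when the run is queued
name: $(major).$(minor).$(year:YY)$(dayofyear).$(rev:r)
```

With these assumed values, the third run queued on day 123 of 2025 would be expected to get a build number of the form 1.2.25123.3.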

Read More

Introduction

Over the years, I have been involved in numerous migrations of TFS/Azure DevOps Server from on-premises servers to the cloud-hosted Azure DevOps Services using the Microsoft Azure DevOps Data Migration Tool. As long as you carefully followed the instructions, the process was relatively straightforward.

I …

Read More

Introduction

I was asked today by a client if there was a way to automate exporting Azure DevOps Test Plans to Excel files. They knew they could do it manually via the Azure DevOps UI, but had a lot of Test Plans to export and wanted to automate the process.

The Options

I considered a few options:

- TCM CLI - This …

Read More

There has been much talk at the Microsoft Build conference of the new agentic world, where you get AI agents to perform tasks on your behalf. This could be in your Enterprise applications, but also in the DevOps process that you use to create these new AI-aware applications.

This can all seem a bit ‘in the …

Read More

Introduction

I have recently been swapping some Azure DevOps Pipelines to GitHub Actions as part of a large GitHub Enterprise migration. The primary tool I have been using for this is GitHub Copilot in the new Agent Mode.

Frankly, Copilot is like magic; it is amazing how close it gets to a valid solution. I say this …

Read More

I have been doing some work on a Logic App that routes its traffic out via a vNet and accesses its underlying Storage Account via private endpoints. ‘Nothing that special in that configuration’ I hear you saying, but I did manage to confuse myself whilst testing.

After changing my configuration of the …

Read More

The Problem

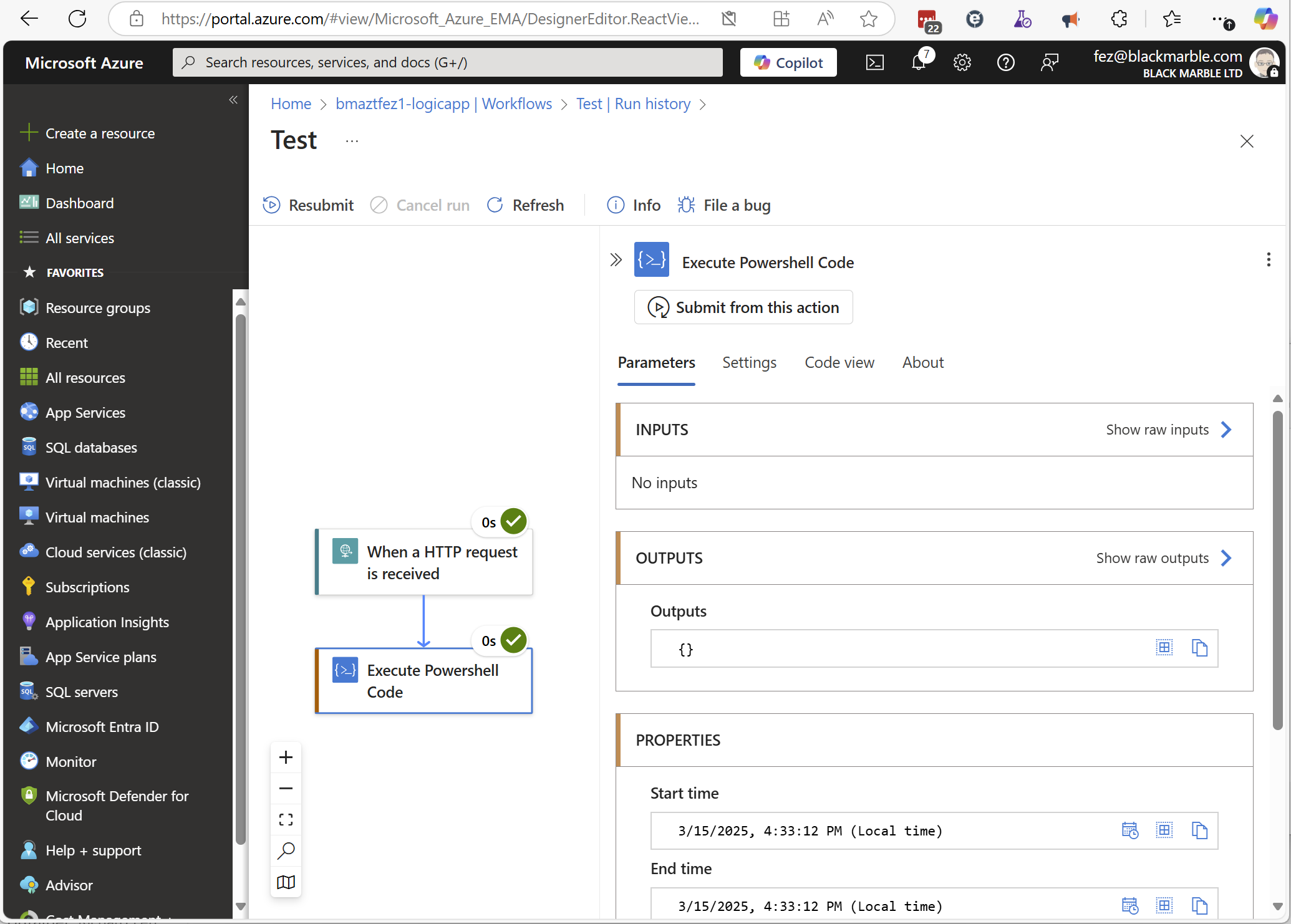

I was recently debugging an Execute PowerShell Code Logic App action, so wanted to see its output. However, when I reviewed the run history, the output for my code action was empty.

The Cause (and Solution)

The problem turned out to be the Logic App’s inbound traffic configuration. You …

Read More

The Problem

I recently had the need in an Azure Logic App to read a CSV file from an Azure Storage account, parse the file, and then process the data row by row.

Unfortunately, there is no built-in action in Logic Apps to parse CSV files.

So as to avoid having to write an Azure function, or use a number of slow, low …

Read More

Since moving house a couple of years ago, I have gained an ever-increasing set of apps on my phone to manage various things, such as the SolarEdge PV and battery system we installed and our Octopus Energy account, to name but two.

In the past, I had avoided IoT and the ‘Intelligent Home’ as I did not want to …

Read More

Edited: 4th Feb 2025 to add detail on Azure DevOps Pipelines Service Connection

Introduction

When automating administration tasks via scripts in Azure DevOps, in the past I would commonly have used the Azure DevOps REST API. However, today I tend to favour the Azure DevOps CLI. The reason for this is that the CLI …

Read More