Background

The Bicep existing keyword is a powerful capability that allows us to reference a resource that wasn’t deployed as part of the current Bicep file.

One typical use case I often see is where a resource is deployed as part of a module called by the parent template, the resource that was …

Read More

I have been doing some work on a Logic App that routes its traffic out via a vNet and accesses its underlying Storage Account via private endpoints. ‘Nothing that special in that configuration’, I hear you say, but I did manage to confuse myself whilst testing.

After changing my configuration of the …
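
When testing a setup like this, one quick sanity check is whether the Storage Account hostname resolves to a private address from where the test is running. The sketch below makes that check in Python. It assumes it runs from a machine inside (or peered with) the vNet. The hostname is a hypothetical placeholder, and this is not necessarily how the post itself tests the configuration.

```python
import ipaddress
import socket


def resolved_addresses(hostname: str) -> list[str]:
    """Return the distinct IP addresses the local resolver gives for hostname."""
    infos = socket.getaddrinfo(hostname, 443, proto=socket.IPPROTO_TCP)
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return sorted({info[4][0] for info in infos})


def uses_private_address(addresses: list[str]) -> bool:
    """True only if at least one address was found and every one is private."""
    return bool(addresses) and all(ipaddress.ip_address(a).is_private for a in addresses)


if __name__ == "__main__":
    # Hypothetical storage account name: replace with your own.
    host = "mystorageaccount.blob.core.windows.net"
    try:
        addrs = resolved_addresses(host)
        print(host, addrs, "private" if uses_private_address(addrs) else "public")
    except OSError as exc:  # socket.gaierror is a subclass of OSError
        print(f"Could not resolve {host}: {exc}")
```

If the check reports a public address from inside the vNet, private DNS for the endpoint is the first thing to look at.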

Read More

The Problem

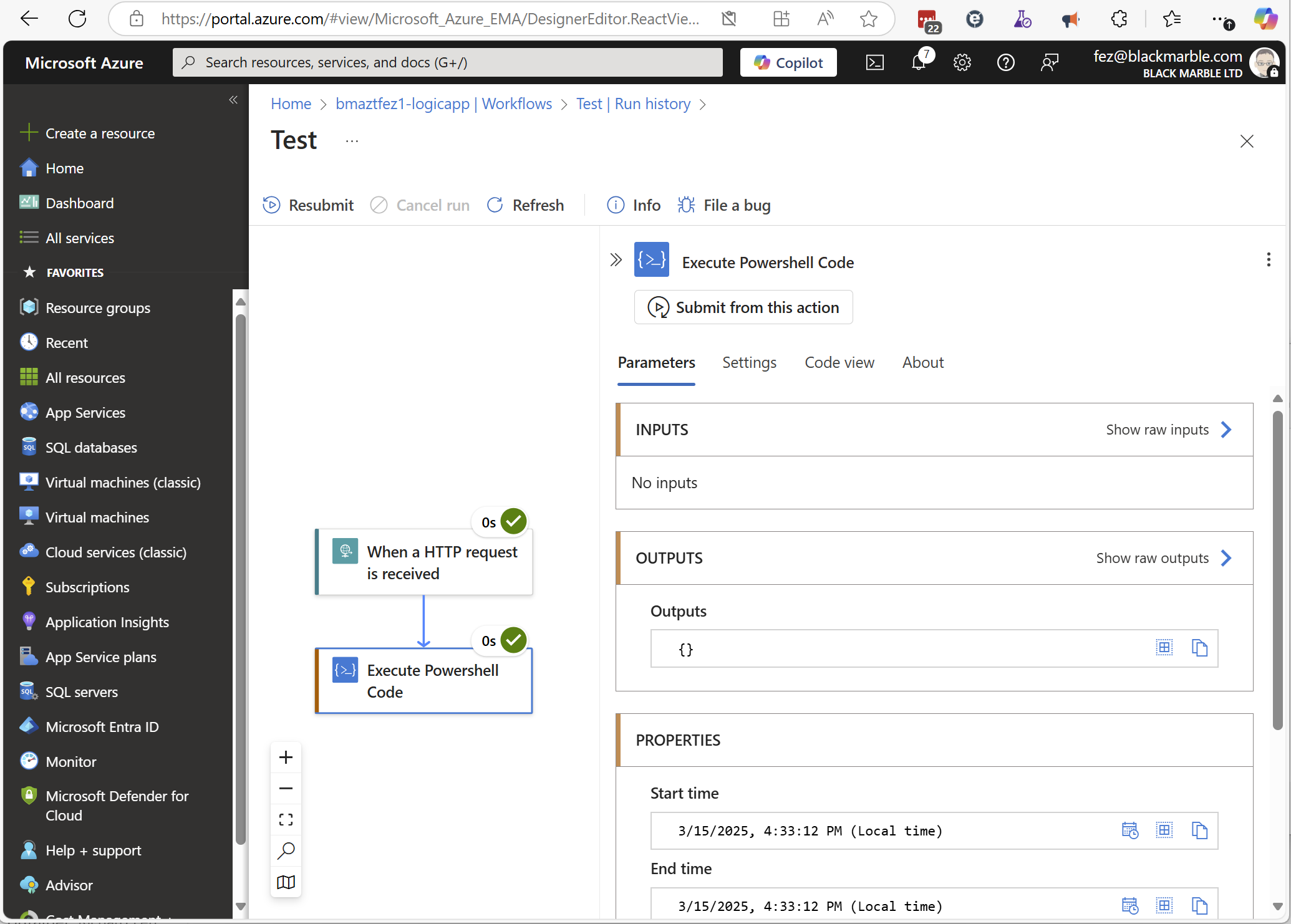

I was recently debugging an Execute PowerShell Code Logic App action, so I wanted to see its output. However, when I reviewed the run history, the output for my code action was empty.

The Cause (and Solution)

The problem turned out to be the Logic App’s inbound traffic configuration. You …

Read More

The Problem

I recently had the need in an Azure Logic App to read a CSV file from an Azure Storage account, parse the file, and then process the data row by row.

Unfortunately, there is no built-in action in Logic Apps to parse CSV files.

To avoid having to write an Azure Function, or use a number of slow, low …
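
To show what a "parse CSV" step has to handle (a header row, and quoted fields that contain commas), here is a small Python sketch of the parse-then-process-each-row flow. It only illustrates the logic. It is not the Logic App implementation the post goes on to describe, and the sample data is made up.

```python
import csv
import io


def parse_csv_rows(text: str) -> list[dict[str, str]]:
    """Parse CSV text with a header row into a list of dicts, one per data row."""
    # The csv module copes with quoted fields containing commas or newlines,
    # which a naive split(",") would break on.
    reader = csv.DictReader(io.StringIO(text, newline=""))
    return [dict(row) for row in reader]


sample = 'id,name,amount\n1,"Smith, J",10.50\n2,Jones,7\n'
for row in parse_csv_rows(sample):
    # Each row would be handed to the per-row processing step here.
    print(row["id"], row["name"], row["amount"])
```

Note that every value comes back as a string, so numeric columns still need converting in the processing step.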

Read More

lewiswrites http://lewispen.github.io/posts/fixing-rss-feed-location/ -

When using GitHub Pages to host your blog, you might encounter an issue where the RSS feed is not located where you expect it to be. This can be particularly problematic if you want your blog to be syndicated and need the RSS feed to be in a …
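
One way to see where a site tells feed readers to look is the RSS/Atom autodiscovery `<link rel="alternate">` tag in the page head. The sketch below extracts those links from a page's HTML using Python's standard library. It assumes the site publishes such a tag. It only helps diagnose the location and is not the fix described in the post.

```python
from html.parser import HTMLParser

FEED_TYPES = {"application/rss+xml", "application/atom+xml"}


class FeedLinkFinder(HTMLParser):
    """Collect href values of <link rel="alternate"> tags that advertise a feed."""

    def __init__(self) -> None:
        super().__init__()
        self.feed_links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        a = dict(attrs)
        rels = (a.get("rel") or "").lower().split()
        feed_type = (a.get("type") or "").lower()
        if "alternate" in rels and feed_type in FEED_TYPES and a.get("href"):
            self.feed_links.append(a["href"])


def find_feed_links(html: str) -> list[str]:
    """Return every advertised feed URL found in the HTML, in document order."""
    finder = FeedLinkFinder()
    finder.feed(html)
    finder.close()
    return finder.feed_links


page = '<head><link rel="alternate" type="application/rss+xml" href="/feed.xml"></head>'
print(find_feed_links(page))
```

To check a live site, pass in the HTML fetched with `urllib.request.urlopen(...).read().decode()`.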

Read More

Overview

In previous articles I have alluded to the risks and best practices of HTTP-triggered workflows and their use of Access Keys for security, such as:

Some Potential Risks:

- If a Key is leaked, anyone who obtains it can use it to call your Logic App Workflow.

- If a Key has expired or been …
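
An HTTP trigger's callback URL carries its key as the `sig` query parameter, alongside `sp` and `sv`. That means anyone holding the full URL can call the workflow. One small mitigation for the leak risk is never writing that URL to logs unredacted. The Python sketch below masks `sig` before a URL is logged. The example URL and workflow ID are hypothetical, and this is not presented as the author's approach.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Query parameters whose values must never appear in logs.
SECRET_PARAMS = {"sig"}


def redact_callback_url(url: str) -> str:
    """Return the URL with the value of any secret query parameter replaced."""
    parts = urlsplit(url)
    query = [
        (key, "REDACTED" if key.lower() in SECRET_PARAMS else value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
    ]
    return urlunsplit(parts._replace(query=urlencode(query)))


example = (
    "https://prod-00.uksouth.logic.azure.com:443/workflows/abc123/triggers/manual/paths/invoke"
    "?api-version=2016-10-01&sp=%2Ftriggers%2Fmanual%2Frun&sv=1.0&sig=SECRETVALUE"
)
print(redact_callback_url(example))
```

Redaction only limits accidental exposure. A key that has already leaked still needs to be regenerated.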

Read More

Since moving house a couple of years ago, I have gained an ever-increasing set of apps on my phone to manage various things, such as the SolarEdge PV and battery system we installed and our Octopus Energy account, to name but two.

In the past, I had avoided IoT and the ‘Intelligent Home’ as I did not want to …

Read More

Edited: 4th Feb 2025 to add detail on the Azure DevOps Pipelines Service Connection

Introduction

In the past, when automating administration tasks via scripts in Azure DevOps, I would commonly have used the Azure DevOps REST API. However, today I tend to favour the Azure DevOps CLI. The reason for this is that the CLI …
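
For comparison, here is roughly what a single REST call involves: building the URL with an `api-version`, and encoding a Personal Access Token (PAT) as a Basic auth header with an empty user name. With the CLI, the rough equivalent is `az devops project list --organization https://dev.azure.com/myorg`. The organization name and PAT are hypothetical placeholders. The request is only built, not sent.

```python
import base64
import urllib.request


def build_projects_request(organization: str, pat: str) -> urllib.request.Request:
    """Build (but do not send) a REST request that lists projects in an organization."""
    url = f"https://dev.azure.com/{organization}/_apis/projects?api-version=7.0"
    # A PAT is sent via HTTP Basic auth with an empty user name, i.e. ":<pat>".
    token = base64.b64encode(f":{pat}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(url, headers={"Authorization": f"Basic {token}"})


req = build_projects_request("myorg", "my-pat-value")
print(req.full_url)
# To send it: urllib.request.urlopen(req) returns JSON with a "value" list of projects.
```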

Read More

The Issue

The Azure DevOps CLI command az boards work-item update can take a list of fields as a set of name-value pairs, e.g.

az boards work-item update --id 123 --fields Microsoft.VSTS.Scheduling.Effort=10

However, if you try to replace the field name with a variable in PowerShell like this

$fieldname = …
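
Whatever the shell, the underlying goal is to hand the CLI the field name and value as one `Name=Value` argument. The Python sketch below shows that idea: it builds the string before passing it on. It is not the PowerShell fix described in the post. The helper name is hypothetical, and actually running the command requires the Azure CLI with the azure-devops extension.

```python
import shlex


def build_update_command(work_item_id: int, field_name: str, value: str) -> list[str]:
    """Build the az argument list, with name=value joined into a single argument."""
    return [
        "az", "boards", "work-item", "update",
        "--id", str(work_item_id),
        "--fields", f"{field_name}={value}",
    ]


field_name = "Microsoft.VSTS.Scheduling.Effort"
cmd = build_update_command(123, field_name, "10")
print(shlex.join(cmd))
# To run it: import subprocess; subprocess.run(cmd, check=True)
```

Passing an argument list (rather than one command string) means no shell re-splits the `Name=Value` token.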

Read More

Background

I recently wrote about the changes I had to make to our Azure DevOps pipelines when code signing with a new DigiCert certificate, due to the new private key storage requirements for Code Signing certificates.

Today I had to do the same for a GitHub Actions pipeline. The process …

Read More